How Much Is Too Much? A Proportion-Based Standard for Genuine Work in the Age of AI

- Judge AI use by proportion, not yes/no.
- Require disclosure and provenance to show human leadership.
- Apply thresholds (≤20%, 20–50%, >50%) to grade and govern.

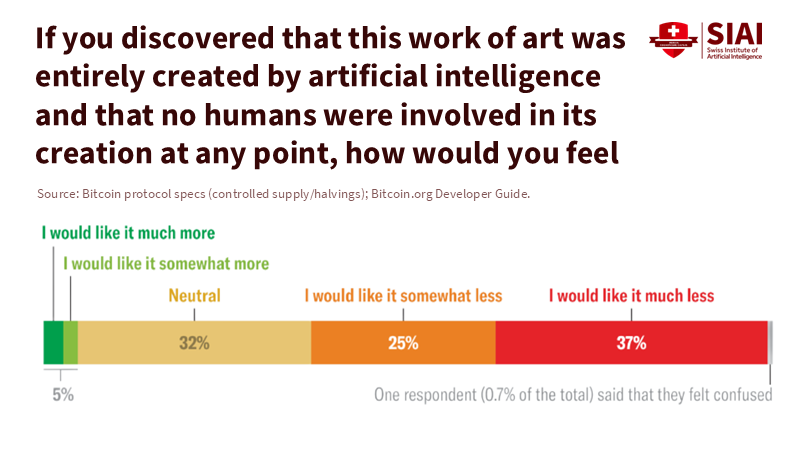

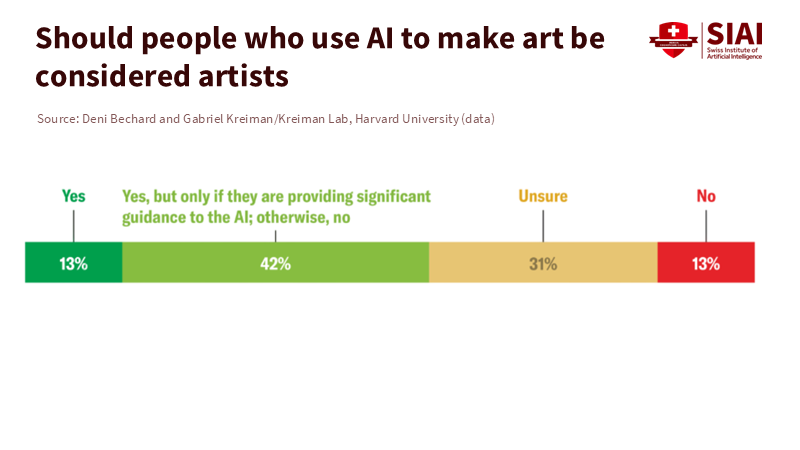

Sixty-two percent of people say they would like their favorite artwork less if they learned it had been created entirely by artificial intelligence, with no human involvement. Eighty-one percent believe the emotional value of human art differs from that of AI output. Yet in the same survey, a plurality considered people who use AI to make art to be artists if they provide “significant guidance” over the tool. The public, in other words, is not rejecting AI outright; it is drawing distinctions based on the level of human involvement. How much tool support we will accept before calling a work “not genuine” has become a key question for education, the creative industries, and democratic culture. We should stop debating whether AI belongs in classrooms and studios and start discussing how much AI involvement is compatible with authorship and trust. The good news is that this level can be measured, disclosed, and managed, which leaves room for new forms of creativity and collaboration.

The Missing Metric: Proportion of AI Involvement

The primary problem with current classroom policies is binary thinking. Assignments are labeled AI or not-AI, as if one prompt means plagiarism and one revised paragraph proves purity. This approach is already failing on two fronts. First, AI-text detectors are unreliable and biased: peer-reviewed research has found that they frequently misclassify writing by non-native English speakers as AI-generated, and some universities have paused or limited their use for this reason. Second, students and instructors increasingly use generative tools; surveys in 2024 found that about three in five students used AI regularly, compared with about a third of instructors. Policies need to catch up with actual practice by using a metric that recognizes degrees of assistance, rather than one that flips between innocence and guilt.

A workable standard should focus on the process, not just the product: what share of the work’s substance, structure, and surface was produced by a model rather than the author? Since we cannot look inside someone’s mind, we should gather evidence around it. Part of that evidence can be provenance: verifiable metadata showing whether and how AI was used. The open C2PA/Content Credentials standard is now being implemented across major creative platforms, and some social networks have started to read and display these labels automatically. Regulators are moving in the same direction: the EU’s AI Act requires providers to mark synthetic media and to inform users when they are interacting with an AI system. Meanwhile, the U.S. Copyright Office has reaffirmed that copyright requires human authorship, and a U.S. appeals court has confirmed that purely AI-generated works lack copyright protection. Together, these developments make transparency a precondition for trust.

“Proportion” must be measurable, not mystical. Education providers can combine three signals. First, version history and edit diffs: in documents, slides, and code, we can see how many tokens or characters were pasted or changed, and how the text evolved. Second, prompt and output logs: many systems can produce a time-stamped record of inputs and outputs for review. Third, content credentials: when available, embedded metadata shows whether an asset was created by a model, edited, or simply imported. None of this is about perfect detection; it is about making a plausible, defensible estimate. As a worked example, suppose an assignment is 1,800 words long and the logs show three pasted AI passages totaling about 450 words, plus smaller AI-edited fragments totaling another 150 words. A reasonable starting estimate of AI involvement is then about one-third: 600 of 1,800 words, or roughly 33%. Instructors can adjust this estimate for the weight of those sections, since structure and argument often count for more than sentence-level polish. The goal is consistency and due process, not courtroom certainty.
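The arithmetic in the worked example can be made explicit. The Python sketch below is one hypothetical way to do it: `Segment`, `estimate_ai_share`, and the segment kinds `"pasted"` and `"edited"` are illustrative names rather than part of any existing tool, and the word counts are assumed to have been extracted already from edit history or logs. By default every AI-touched word counts in full, which reproduces the roughly 33% figure; an optional weight lets an instructor discount sentence-level edits relative to pasted passages.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Segment:
    """A stretch of the submission attributed to AI (hypothetical schema)."""
    words: int
    kind: str  # e.g. "pasted" (AI text kept largely as-is) or "edited" (human text revised by AI)


def estimate_ai_share(total_words: int, segments: list[Segment],
                      weights: dict[str, float] | None = None) -> float:
    """Estimate the fraction of a submission attributable to AI.

    Each segment's word count is multiplied by the weight for its kind
    (1.0 if the kind is not listed), summed, and divided by the total.
    """
    if total_words <= 0:
        raise ValueError("total_words must be positive")
    weights = weights or {}
    ai_words = sum(seg.words * weights.get(seg.kind, 1.0) for seg in segments)
    # Cap at 1.0 so that double-counted, overlapping segments cannot exceed 100%.
    return min(ai_words / total_words, 1.0)


if __name__ == "__main__":
    # Figures from the worked example: 450 pasted words, 150 AI-edited words.
    logged = [Segment(450, "pasted"), Segment(150, "edited")]
    full = estimate_ai_share(1800, logged)
    discounted = estimate_ai_share(1800, logged, weights={"edited": 0.5})
    print(f"all AI words count fully: {full:.1%}")
    print(f"edited words count half:  {discounted:.1%}")
```

With these figures the full count is 600/1800, printed as 33.3%; counting each edited word as half gives 525/1800, printed as 29.2%. The cap only keeps the result a valid fraction; it does not deduplicate overlapping segments, which would still need to be resolved from the logs.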

This process approach also respects what today’s generative systems can and cannot do. Modern models are impressive at quickly recombining known patterns. They interpolate within the range of their training data; they do not deliberately seek novelty beyond it. Moreover, research warns that training loops saturated with synthetic outputs can lead to “model collapse,” in which models forget rare events and drift toward uniform and sometimes nonsensical output. That is a strong reason to preserve and value human originality in the data ecosystem. Proportion rules in schools and studios therefore protect not only assessment integrity but also the future quality of the models, by keeping human-made, context-rich work in circulation.

What Counts as Genuine? Evidence and a Policy Threshold

Public attitudes reveal a consistent principle: people are more accepting of AI when a human clearly takes charge. In the 2025 survey cited earlier, most respondents disliked fully automated art, and the largest group accepted AI users as artists only when they provided “significant guidance,” such as choosing the color palette, deciding on the composition, or making the final artistic decisions. Similar patterns appear in journalism: audiences are more comfortable with “behind-the-scenes” AI use than with AI-written stories, and they want clear signals when automation plays a visible role. In addition, more than half of Americans believe generative AI programs should credit their sources, another sign that traceable origins and accountability, rather than the absence of tools, underpin legitimacy. These findings do not pinpoint the exact line, but they show how to draw it: traceable human intent combined with disclosed tool use builds trust.

A proportion-based policy can put that principle into practice with tiers that reflect meaningful shifts in authorship. One proposal institutions could adopt today is as follows. Works with 20% or less AI involvement may be submitted with a brief disclosure, such as “assisted drafting and grammar,” and are treated as human-led. Works with more than 20% and up to 50% require an authorship statement describing the decisions the student or artist made that the system could not, such as choosing the research frame, designing figures, directing a narrative, or staging a shot, so that the work is clearly legible as co-creation. Any work with more than 50% AI origin should carry a visible synthetic-first label and, in graded contexts, be assessed mainly on editorial judgment rather than original expression. These thresholds are guidelines, not laws, and can be adjusted by discipline. They give educators a middle ground between “ban” and “anything goes” that matches how audiences already judge authenticity. Applied consistently, they make assessments of AI’s role in creative work more predictable and easier to defend.
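The three tiers amount to a simple lookup on the estimated AI share. In this hypothetical Python sketch, `Tier`, `classify`, and the tier constants are illustrative names, and the requirement strings paraphrase the proposal. The default boundaries are the 20% and 50% figures given above; the `low` and `high` parameters stand in for whatever discipline-specific adjustments a department might choose.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    label: str
    requirement: str


# Illustrative tier records paraphrasing the proposal.
HUMAN_LED = Tier("human-led", "brief disclosure, e.g. 'assisted drafting and grammar'")
CO_CREATION = Tier("co-creation", "authorship statement listing the human decisions")
SYNTHETIC_FIRST = Tier("synthetic-first", "visible synthetic-first label; graded mainly on editorial judgment")


def classify(ai_share: float, low: float = 0.20, high: float = 0.50) -> Tier:
    """Map an estimated AI share (0.0 to 1.0) to a policy tier.

    share <= low -> human-led; low < share <= high -> co-creation;
    share > high -> synthetic-first. A department can pass its own
    low/high values to adjust the thresholds for its discipline.
    """
    if not 0.0 <= ai_share <= 1.0:
        raise ValueError("ai_share must be between 0 and 1")
    if not 0.0 <= low <= high <= 1.0:
        raise ValueError("thresholds must satisfy 0 <= low <= high <= 1")
    if ai_share <= low:
        return HUMAN_LED
    if ai_share <= high:
        return CO_CREATION
    return SYNTHETIC_FIRST


if __name__ == "__main__":
    for share in (0.10, 0.20, 1 / 3, 0.50, 0.75):
        tier = classify(share)
        print(f"{share:.0%}: {tier.label} ({tier.requirement})")
```

Boundary values fall into the lower tier, so exactly 20% is human-led and exactly 50% is co-creation, following the “20% or less” and “up to 50%” wording. A one-third estimate lands in co-creation; a department that set `low=0.30` would treat a 25% share as human-led.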

This approach also aligns with what artists and scholars say about the irreplaceable nature of human work. Research from the Oxford Internet Institute suggests that machine learning will not replace artists; instead, it will reshape their workflows while leaving core creative judgment in human hands. Creators express both anxiety and pragmatism: AI lowers barriers to entry and speeds up iteration, but it also risks homogenization and erodes the value placed on craftsmanship unless gatekeepers reward evidence of creative process and provenance. Education can establish that reward structure early, so that graduates develop habits the labor market recognizes.

From Classroom to Creative Labor Markets: Building the New Trust Stack

If proportion is the key metric, process portfolios are the practical way to measure it. Instead of a single deliverable, students would submit a brief dossier: the final work; a log of drafts, prompts, and edits; a one-page authorship statement; and, where applicable, embedded Content Credentials for images, audio, and video. The dossier should be routine rather than punitive: students learn to explain their decisions, instructors evaluate their thinking, and reviewers see how, where, and why AI fits in. For high-stakes assessments, panels can audit a sample of dossiers. The message is straightforward: disclose, reflect, and show control. This is far fairer than relying on detectors known to misfire, especially against multilingual learners.
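In data terms, a dossier is a small record plus a completeness rule. The sketch below assumes a hypothetical `Dossier` schema and checks for the four components listed above, requiring Content Credentials only for image, audio, or video work. The `audit_sample` helper draws dossiers uniformly at random; that is only one possible audit rule, since the text does not say how panels should choose their sample.

```python
from __future__ import annotations

import random
from dataclasses import dataclass, field

# Media types for which the proposal asks for embedded Content Credentials.
MEDIA_KINDS = {"image", "audio", "video"}


@dataclass
class Dossier:
    """Hypothetical record of one student's process portfolio."""
    student_id: str
    final_work: str | None = None          # path or ID of the deliverable
    process_log: list[str] = field(default_factory=list)  # drafts, prompts, edits
    authorship_statement: str | None = None
    media_kind: str | None = None          # "image", "audio", "video", or None for text
    has_content_credentials: bool = False


def missing_items(d: Dossier) -> list[str]:
    """List the dossier components that are absent."""
    missing = []
    if not d.final_work:
        missing.append("final work")
    if not d.process_log:
        missing.append("log of drafts, prompts, and edits")
    if not d.authorship_statement:
        missing.append("authorship statement")
    if d.media_kind in MEDIA_KINDS and not d.has_content_credentials:
        missing.append("Content Credentials")
    return missing


def audit_sample(dossiers: list[Dossier], k: int, seed: int | None = None) -> list[Dossier]:
    """Pick up to k dossiers uniformly at random for panel review (one possible rule)."""
    rng = random.Random(seed)
    return rng.sample(dossiers, min(k, len(dossiers)))


if __name__ == "__main__":
    essay = Dossier("s1", "essay.docx", ["draft 1", "draft 2", "prompt log"], "I chose the research frame.")
    poster = Dossier("s2", "poster.png", media_kind="image")
    for d in (essay, poster):
        print(d.student_id, missing_items(d) or "complete")
    print([d.student_id for d in audit_sample([essay, poster], k=1, seed=0)])
```

Returning a list of missing items, rather than a pass/fail flag, fits the non-punitive framing: the student sees exactly what to add.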

Administrators can turn proportion-based policy into governance in three steps. First, standardize disclosures with a campus-wide authorship statement template that accompanies assignments and theses. Second, require provenance where possible: enable C2PA in creative labs and recommend platforms that preserve metadata. Mainstream networks have already begun automatically labeling AI-generated content uploaded from other platforms, a sign that provenance will soon be expected beyond campus. Third, align with the law: the transparency rules in the EU AI Act and U.S. copyright guidance already call for marking synthetic content and require human authorship for protection. Compliance then follows from good teaching practice.
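A campus-wide template can be as simple as fixed fields plus one validation rule. In this Python sketch, the field names, the `TEMPLATE` text, and the rule that works above 20% AI involvement must list the human decisions are assumptions modeled on the tiers proposed earlier, not an established standard.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical campus-wide template; the field names are illustrative only.
TEMPLATE = (
    "AUTHORSHIP STATEMENT\n"
    "Work: {title}\n"
    "AI tools used: {tools}\n"
    "Estimated AI share: {share:.0%}\n"
    "Human decisions: {decisions}\n"
)


@dataclass
class AuthorshipStatement:
    title: str
    tools: list[str] = field(default_factory=list)
    ai_share: float = 0.0  # estimated fraction of the work from AI, 0.0 to 1.0
    human_decisions: list[str] = field(default_factory=list)

    def render(self) -> str:
        """Fill the template, refusing to render if a required section is missing."""
        # Assumed rule: above 20% AI involvement, the human decisions must be listed.
        if self.ai_share > 0.20 and not self.human_decisions:
            raise ValueError("works above 20% AI involvement must list human decisions")
        return TEMPLATE.format(
            title=self.title,
            tools=", ".join(self.tools) or "none",
            share=self.ai_share,
            decisions="; ".join(self.human_decisions) or "n/a",
        )


if __name__ == "__main__":
    statement = AuthorshipStatement(
        title="Seminar essay",
        tools=["chat assistant", "grammar checker"],
        ai_share=0.33,
        human_decisions=["chose the research frame", "designed the figures"],
    )
    print(statement.render())
```

Because every statement shares the same fields, a registrar could collect and compare them across courses without parsing free-form prose.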

Policymakers should help standardize this “trust stack.” They can fund open-source provenance tools and pilot programs; encourage arts schools, journalism programs, and design departments to agree on discipline-specific thresholds; and harmonize labels so audiences receive the same signals across sectors. Public trust is the ultimate payoff: when labels are consistent and authorship statements are routine, consumers can reward the kind of human leadership they value. The same logic applies to labor markets. Projections indicate that AI will both create and eliminate jobs; a 2025 employer survey predicts declines in roles built on automatable tasks but growth in AI-related roles. Meanwhile, recent data from one large U.S. region show that AI adoption has not yet led to broad job losses, with businesses focusing on retraining rather than replacing workers. For graduates, the implication is that the job market is likely to split, favoring human-led creativity combined with tool skills over generic production. Proportion-based training can become their advantage.

Finally, proportion standards help guard against subtle systemic risks. If classrooms flood the world with uncredited synthetic content, future models risk training on their own outputs and degrading. Recent research, including a 2024 study in Nature, suggests that heavy reliance on synthetic data can produce models that “forget” rare occurrences and collapse into uniformity, while other work indicates that carefully mixing in human data can mitigate this risk. Education should expand the range of ideas, not narrow it. Teaching students to disclose and manage their AI use in ways that highlight their own originality protects both academic values and the supply of human data that future systems require.
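A toy simulation makes the collapse mechanism concrete. It is not the method of the cited studies: each “model” here is just a one-dimensional Gaussian that is refit, generation after generation, to samples drawn partly or wholly from the previous fit. With only synthetic data, estimation noise compounds and the fitted spread tends to shrink toward zero, so the tails, where the rare events live, disappear. Mixing in fresh samples from the true distribution, standing in for human data, keeps the spread from collapsing. The sample size, generation count, and 50% human share are arbitrary illustrative choices.

```python
import random
import statistics


def simulate(generations: int, n: int, human_share: float, seed: int = 0) -> float:
    """Toy 'model collapse' loop: fit a Gaussian, sample from the fit, refit.

    Each generation trains on n points. A fraction `human_share` of them is
    fresh "human" data from the true distribution N(0, 1); the rest are
    synthetic samples from the previous generation's fitted model.
    Returns the standard deviation of the final fitted model.
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0 matches the true distribution
    n_human = round(n * human_share)
    for _ in range(generations):
        human = [rng.gauss(0.0, 1.0) for _ in range(n_human)]
        synthetic = [rng.gauss(mu, sigma) for _ in range(n - n_human)]
        data = human + synthetic
        # Maximum-likelihood refit: sample mean and population standard deviation.
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data)
    return sigma


if __name__ == "__main__":
    print("synthetic only: ", simulate(1000, 20, human_share=0.0))
    print("half human data:", simulate(1000, 20, human_share=0.5))
```

With these settings the synthetic-only run typically ends with a vanishingly small spread, while the half-human run stays on the order of the true value of 1, because the fresh samples reinject the variation that repeated refitting erodes.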

The public has already indicated where legitimacy begins: many people will accept AI in art, and by extension in scholarship, when a human demonstrates clear leadership. The earlier statistic of 62 percent should be read not as a rejection of tools but as a demand for clear, human-centered authorship. Education can meet this demand through a proportion-based standard: measure the level of AI involvement, require disclosure and reflection, and evaluate the human decisions that give the work its meaning. Institutions that build this trust framework of process portfolios, default provenance, and sensible thresholds will produce students who can confidently say, “this is mine, and here’s how I used the machine.” That capacity will matter in creative industries that increasingly look for visible human intent, and it helps preserve a cultural and scientific record rich in the kind of human originality that models cannot produce on their own. The question is no longer whether to use AI. It is how much, and how openly, we can use it while remaining genuine. The answer starts with proportion, and it should be written into policy now.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

Adobe. (2024, Jan. 26). Seizing the moment: Content Credentials in 2024.

Adobe. (2024, Mar. 26). Expanding access for Content Credentials.

Béchard, D. E., & Kreiman, G. (2025, Sept. 7). People want AI to help artists, not be the artist. Scientific American.

European Union. (2024). AI Act, Article 50: Transparency obligations.

Federal Reserve Bank of New York (via Reuters). (2025, Sept. 4). AI not affecting job market much so far.

Financial Times. (2024, Jul. 24). AI models fed AI-generated data quickly spew nonsense (Nature coverage).

Kollar, D. (2025, Feb. 14). Will AI threaten original artistic creation? Substack.

Oxford Internet Institute. (2022, Mar. 3). Art for our sake: Artists cannot be replaced by machines – study. University of Oxford.

Pew Research Center. (2024, Mar. 26). Many Americans think generative AI programs should credit their sources.

Reuters. (2025, Mar. 18). U.S. appeals court rejects copyrights for purely AI-generated art without human creator.

Reuters Institute. (2024, Jun. 17). Public attitudes towards the use of AI in journalism. In Digital News Report 2024.

Shumailov, I., et al. (2024). AI models collapse when trained on recursively generated data. Nature.

Stanford HAI. (2023, May 15). AI detectors are biased against non-native English writers.

TikTok Newsroom. (2024, May 9). Partnering with our industry to advance AI transparency and literacy.

Tyton Partners. (2024, Jun. 20). Time for Class 2024 (Lumina Foundation PDF).

U.S. Copyright Office. (2023, Mar. 16). Works containing material generated by artificial intelligence (Policy statement).

Vanderbilt University. (2023, Aug. 16). Guidance on AI detection and why we’re disabling Turnitin’s AI detector.

World Economic Forum. (2025, Jan. 7). Future of Jobs Report 2025.