The Barbell Workforce: How AI Lowers the Floor and Raises the Ceiling in Education and Industry

AI lowers entry barriers and raises mastery standards. Novices gain most; experts move to oversight and design. Education must deliver operator training and governance mastery.

A quiet result from a very loud technology deserves more attention. When a Fortune 500 company gave customer-support agents an AI assistant, productivity rose by 14% on average, but it jumped by 34% for the least-skilled workers. In other words, the most significant early gains from generative AI appeared at the bottom of the ladder, not the top. This single statistic changes how we view the skills debate. Automation is not just about replacing routine jobs; it also helps novices perform at nearly intermediate levels from day one. In factories and offices, this creates a barbell labor market: easier entry for low-skill roles and a faster-moving ceiling for highly skilled workers. Education systems (secondary, vocational, and higher) must adapt to a world where AI lowers the competence floor and raises the standard for mastery. The policy question is not whether AI will change work; it already has. The question is whether our learning institutions will change at the same speed. Curriculum reform is therefore urgent and must be continuous.

The New Floor: Automation Expands the Entry Ramp

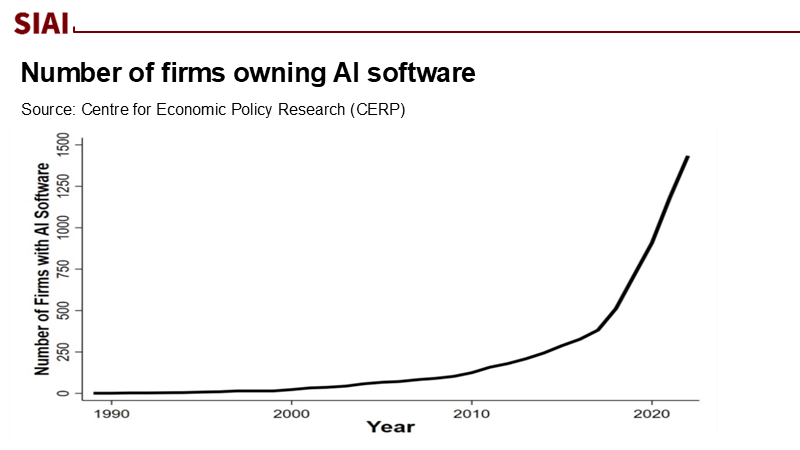

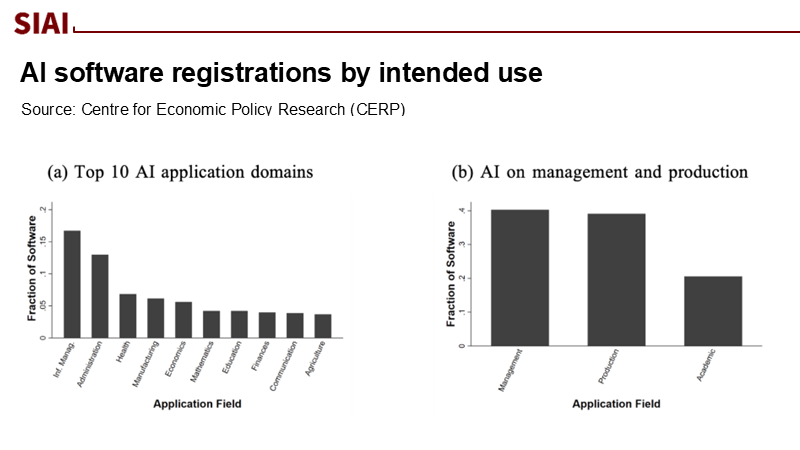

Evidence from large-scale deployments shows a clear trend. On the factory side, global robot stocks reached about 4.28 million operational units in 2023, increasing roughly 10% in a year, with Asia accounting for 70% of new installations. However, the new wave is less about brute automation and more about software that makes complex equipment understandable to less experienced operators. In administrative settings, AI replaces routine desk tasks; on shop floors, it often supports people by integrating guidance, quality control, and predictive maintenance into the tools themselves. Recent data show an overall shift towards higher demand for machine operators and younger, less-credentialed workers, even as clerical roles continue to shrink. This creates a two-sector split that policymakers cannot ignore.

The mechanism is straightforward. Generative systems capture the playbook of top performers and provide it to novices in real time. In extensive field studies, AI assistants helped less-experienced workers resolve customer issues faster and with better outcomes, effectively condensing months of on-the-job learning into weeks. This aligns with another line of research indicating that access to a general-purpose language model can reduce writing time by about 40% while improving quality, especially for those starting further from the frontier. These are not trivial edge cases or lab-only outcomes; they have now been observed in real workplaces across different task types. For entry-level employees, AI acts as a tutor and a checklist hidden within the workflow.

If this is the new floor, two implications arise for education. First, basic skills (numeracy, writing, data hygiene) still matter, but the threshold for job-ready performance is shifting from “memorize the rules” to “operate the system that encodes the rules.” Second, AI exposure is unevenly distributed. Cross-country analysis suggests that occupations with high AI exposure are disproportionately white-collar and highly educated. However, this exposure has not led to widespread employment declines to date; in several cases, employment growth has even been positively linked to AI exposure over the past decade. This is encouraging, but it also means that entry ramps are being widened most where AI tools are actively used. Schools and training programs that treat AI as taboo risk excluding students from the very complementarity that drives early-career productivity. Educators and policymakers must ensure equitable access to AI so that these opportunities are open to all students.

The Moving Ceiling: Why the Highly Skilled Must Climb Faster

It is tempting to think that if AI boosts lower performers the most, it lowers the value of expertise. However, the emerging evidence suggests the opposite: mastery changes shape and moves upward. In a study of software development across multiple firms, access to an AI coding assistant increased output by about 26% on average. Junior developers saw percentage gains in the high 20s to high 30s, while seniors saw single-digit increases. This does not render senior engineers redundant; it raises the expectations for what “senior” should mean: less focus on syntax and boilerplate and more emphasis on architecture, verification, and socio-technical judgment. In writing and analysis, a similar pattern emerges: significant support on routine tasks and uneven benefits on complex, open-ended problems where human oversight and domain knowledge are crucial. The ceiling is not lower; it is higher and steeper.
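
To make the arithmetic behind this compression concrete, here is a minimal sketch. The baseline output levels are hypothetical and chosen only for illustration; they do not come from any of the studies discussed. The gains (30% for a junior, 8% for a senior) are assumed values that fall within the ranges described above.

```python
def apply_gain(baseline: float, gain_pct: float) -> float:
    """Return output after applying a percentage productivity gain."""
    return baseline * (1 + gain_pct / 100)


# Hypothetical baselines in arbitrary output units (an assumption, not study data).
junior_base = 10.0
senior_base = 20.0

# Assumed gains within the ranges described in the text.
junior_gain_pct = 30.0  # somewhere in the high 20s to high 30s
senior_gain_pct = 8.0   # a single-digit increase

junior_after = apply_gain(junior_base, junior_gain_pct)
senior_after = apply_gain(senior_base, senior_gain_pct)

# Ratio of senior to junior output, before and after AI assistance.
ratio_before = senior_base / junior_base
ratio_after = senior_after / junior_after

print(f"Senior/junior output ratio before: {ratio_before:.2f}")
print(f"Senior/junior output ratio after:  {ratio_after:.2f}")
```

Under these assumed numbers, the senior-to-junior output ratio falls from 2.00 to about 1.66. The senior still produces more, but the advantage on routine output shrinks, which is why the premium on expertise has to come from work the tool does not do well.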

This helps explain a paradox in worker sentiment. Even as tools improve speed and consistency, most workers say they rarely use AI, and many are unsure if the technology will benefit their job prospects. Only about 6% expect more opportunities from workplace AI in the long run; a third expect fewer. From a barbell perspective, this hesitation is logical: if AI handles standard tasks, the market will reward those who can operate the system reliably (new entry-level roles) and those who can design, audit, and integrate it across processes (new expert roles). The middle, where careers once developed over many years, is compressing. Education that does not teach students how to climb—from tool use to tool governance—will leave graduates stuck on the flattened middle rung.

For high-skill workers, the solution is not generic “upskilling” but specialization beyond the model’s capabilities: data stewardship, human-factors engineering, causal reasoning, adversarial testing, and cross-domain synthesis. Studies of knowledge workers show that performance can improve dramatically for tasks “inside” the model’s capabilities, but it can decline on tasks “outside” it if workers overly trust fluent outputs. This asymmetry is where advanced programs should focus: teaching when to rely on the model and when to question it. Think fewer assignments aimed at producing a clean draft and more assignments aimed at proving why a draft is correct, safe, and fair, with the model as a visible, critiqued collaborator rather than a hidden ghostwriter.

Designing a Two-Track Education Agenda

If AI lowers the entry threshold and raises the mastery bar, education policy should explicitly support both tracks. On the entry side, we need programs that quickly and credibly certify “operator-with-AI” competence. Manufacturing already sets an example. With robots at record scale and software guiding the production line, short, modular training can prepare graduates to operate systems that once required years of implicit knowledge. Real-time decision support, simulation-based training, and built-in diagnostics reduce the time it takes new hires to become productive. Community colleges and technical institutes that collaborate with local employers to design “Level 1 Operator (AI-assisted)” certificates will broaden access while addressing genuine demand.

The office counterpart is just as practical. Instead of prohibiting AI from assignments and then hoping for honesty, instructors should require paired submissions: a human-only baseline followed by an AI-assisted revision with a brief error log. This approach preserves practice in core skills while teaching students to view systems as amplifiers rather than crutches. It also instills the meta-skills that employers value but often do not assess: prompt management, fact verification, and iterative critique. Early field results indicate that novices benefit most from this structure; schools can gain a similar advantage by incorporating this scaffold into their rubrics.

For the mastery track, universities should shift focus toward governance literacy and system integration. Capstone projects should include model selection and evaluation under constraints, robustness testing, and comprehensive documentation that can be audited by a third party. Practicums can use real data from operations (help desks, registrars, labs) with explicit permissions and guidelines, allowing students to study not only performance improvements but also potential failures. Employers already indicate that adoption, not invention, is the primary barrier; surveys across industries show that enthusiasm outpaces readiness, with leadership, skills, and change management identified as key obstacles. This is a problem education can solve—if curricula are allowed to evolve at the pace of deployment rather than the pace of textbook cycles.

There is also a role for public policy to ensure that the floor rises effectively. Two main approaches stand out. First, expand last-mile apprenticeships linked to AI-enabled roles: a semester-long “operator residency” in advanced manufacturing, a co-op in data-supported student services, and a supervised stint in clinical administration using AI for scheduling and triage. Second, build assessment systems that align incentives: state systems could fund verification labs that test whether graduates can manage, monitor, and explain AI-assisted workflows to professional standards. These are foundational capacities, akin to welding booths or nursing mannequins from an earlier era. They make the invisible visible and certify what truly matters.

Skeptics will raise three reasonable critiques. One concern is that automation may lead to deskilling: if AI takes over tasks such as grammar or standard coding, will students lose foundational skills? Evidence suggests that when curricula sequence tasks—first unaided, then supported with explicit reflection—skills improve rather than deteriorate. A second critique is that AI adoption in the real world remains inconsistent; most workers currently report little to no use of AI in their jobs. This situation strongly argues for education to bridge the usage gap, enabling early-career workers to drive diffusion. A third critique concerns equity: will the benefits be distributed to those who already have access to better schools and devices? This risk is real; however, studies showing significant effects for novices also indicate that universal access and instruction can help reduce inequality. The challenge for policy is to ensure that this complementarity is broad, not exclusive.

The 34% productivity gain for the least-skilled workers is a reminder that AI’s most apparent benefits are not just for specialists. On the factory floor, software-guided machines allow newer operators to contribute sooner. In the office, embedded co-pilots help transform rough drafts into solid first versions. That is the “lowers the floor” aspect. The raised ceiling means that as standard tasks become faster and more uniform, fundamental value shifts to design, verification, and integration, the judgment calls that automation cannot replace. Education must embrace both sides. It must teach students how to use the tools without losing the craft and to take responsibility for the aspects of work that tools cannot handle. This requires credentialing operator competence early, developing governance mastery later, and measuring both through honest assessments. If we act now, the barbell workforce can be a deliberate policy choice rather than a chance occurrence, one that expands opportunities at entry and deepens expertise at the top.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

Brynjolfsson, E., Li, D., & Raymond, L. (2025). Generative AI at Work. Quarterly Journal of Economics, 140(2), 889–942.

International Federation of Robotics. (2024, Sept. 24). Record of 4 million robots working in factories worldwide. Press release and global market.

ManufacturingDive. (2025, Apr. 14). Top 3 challenges for manufacturing in 2025: Skills gap, turnover, and AI.

ManufacturingTomorrow. (2025, Aug.). How to Use AI to Close the Manufacturing Skills Gap.

MIT Sloan Ideas Made to Matter. (2024, Nov. 4). How generative AI affects highly skilled workers.

MIT Sloan Ideas Made to Matter. (2025, Mar. 10). 5 issues to consider as AI reshapes work.

Noy, S., & Zhang, W. (2023). Experimental evidence on the productivity effects of generative artificial intelligence. Science, 381.

Pew Research Center. (2025, Feb. 25). U.S. workers are more worried than hopeful about future AI use in the workplace; and Workers’ exposure to AI.

De Souza, G. (2025). Artificial Intelligence in the Office and the Factory: Evidence from Administrative Software Registry Data. Federal Reserve Bank of Chicago Working Paper 2025-11; and VoxEU column summary.
