Hiring for the AI Frontier: Why Education Systems Must Rewire Jobs, Not Just Buy Tools

This article is based on ideas originally published by VoxEU – Centre for Economic Policy Research (CEPR) and has been independently rewritten and extended by The Economy editorial team. While inspired by the original analysis, the content presented here reflects a broader interpretation and additional commentary. The views expressed do not necessarily represent those of VoxEU or CEPR.

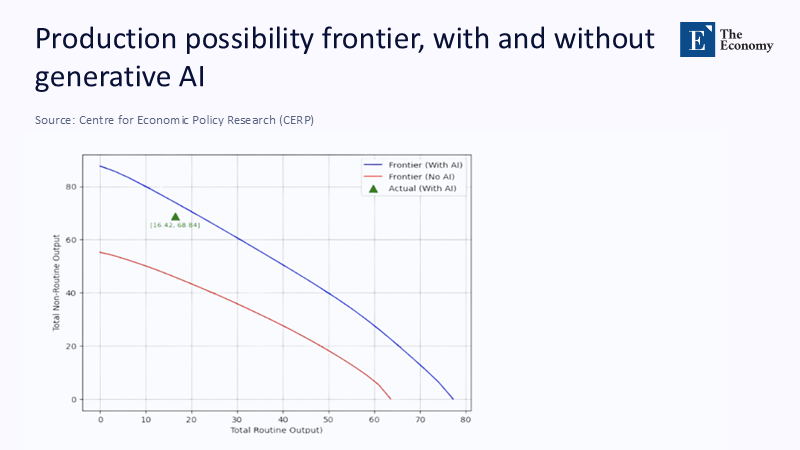

A randomized workplace experiment inside a European central bank underscores the case for targeted investments and safeguards in AI. Opening a single browser tab to a generative AI assistant raised task quality by as much as 44% and cut completion time by roughly 21%. More instructive than the headline numbers was what happened next: when managers simulated nothing more exotic than reassigning tasks to the people who benefited most from the tool, aggregate output climbed by an additional 7.3%, with no extra headcount, no overtime, and no capital spend. The policy implication is as sharp as it is unfashionable. Generative AI is not only a productivity aid; it is a labor-market re-matcher. It makes previously overlooked candidates competitive if they can work “with” the model, and it frees top performers to spend more time on judgment and coordination. In education systems facing hiring freezes and rising expectations, a technology that both widens the candidate pool and lifts expert productivity is not a gadget; it is civil-service infrastructure in waiting.

From Adoption to Allocation: A Reframing for the Post-Pilot Era

Public debate still oscillates between automation anxiety and augmentation optimism. Both miss the central policy opportunity: 'access plus allocation'. The term captures the idea that providing access to AI tools is not enough; the tools, and the tasks they support, must also be allocated to the people who gain most from them. Access matters because the gains are uneven but predictable. Across rigorous field and lab settings, less-experienced staff tend to see the largest quality lift with generative assistants, while their seasoned colleagues harvest meaningful time savings on cognitively demanding, non-routine work. That asymmetry shifts the hiring signal. Candidates who might once have been screened out for lack of elite prior experience become viable if they can demonstrate reliable, assisted output; incumbents who already excel translate the assistant into cycle-time reductions and scope expansion. This is not a feel-good story; it is a staffing strategy. Evidence from service operations shows average productivity gains of around 14–15%, concentrated among novices; controlled trials on complex knowledge tasks show significant quality and speed improvements across the distribution, albeit with worse results on tasks beyond the model’s frontier.

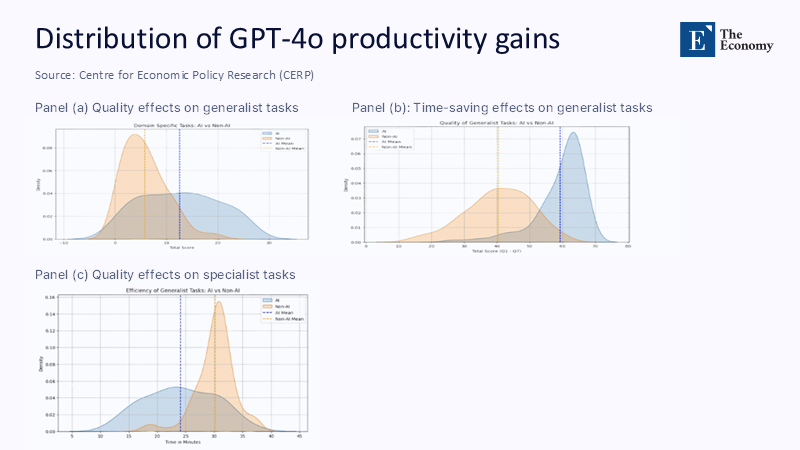

What the Numbers Say—and How to Estimate Real Gains

The best evidence points to mid-teens average productivity effects, with much larger tail gains for specific pairings of task and worker. In a large firm deploying an AI copilot to customer support agents, throughput rose about 14–15% on average, with improvements above 30% for novices and smaller effects for the most experienced agents. In preregistered experiments on mid-level professional writing, completion time fell by around 40%, and independent raters scored quality roughly 18% higher. And in a central-bank setting covering routine and domain-specific work, average quality gains reached the 33–44% range and time fell by around a fifth, with the strongest quality lifts for lower-skill staff and the largest time savings for higher-skill colleagues. A simple, conservative translation for an education ministry: if 30% of white-collar time sits in drafting, synthesis, and analysis tasks and AI reliably lifts performance on those by 15%, system-wide effective capacity rises by about 4.5% (0.30 × 0.15) before any task reallocation. The counterfactual reallocation dividend, measured at an additional 7.3% in the central-bank experiment, suggests that targeted role redesign can plausibly double that aggregate gain.
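The back-of-the-envelope translation above can be sketched in a few lines of Python. The 30% task share, 15% uplift, and 7.3% reallocation dividend come from the text; the function name and the way the scenarios are combined are illustrative assumptions, and the "added on top" case in particular assumes the central-bank dividend would transfer unchanged to a ministry, which the evidence does not establish.

```python
def effective_capacity_gain(task_share: float, uplift: float) -> float:
    """Share of working time in AI-amenable tasks times the lift on those tasks."""
    if not 0.0 <= task_share <= 1.0:
        raise ValueError("task_share must be between 0 and 1")
    return task_share * uplift


# Figures quoted in the text.
TASK_SHARE = 0.30             # time spent on drafting, synthesis, analysis
UPLIFT = 0.15                 # assumed reliable performance lift on those tasks
REALLOCATION_DIVIDEND = 0.073 # extra output from reassignment (central-bank experiment)

baseline = effective_capacity_gain(TASK_SHARE, UPLIFT)

# Hypothetical scenarios for illustration only; how the dividend combines
# with the baseline in a ministry setting is an assumption, not a finding.
scenarios = {
    "baseline, no reallocation": baseline,
    "doubling scenario described in the text": 2 * baseline,
    "full dividend added on top (optimistic)": baseline + REALLOCATION_DIVIDEND,
}

for label, gain in scenarios.items():
    print(f"{label}: {gain:.1%}")
```

Varying `TASK_SHARE` and `UPLIFT` shows how sensitive the headline figure is to the assumed share of AI-amenable work, which is usually the least well-measured input.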

The German Lesson: Infrastructure, Complementarities, Selection

Europe has seen a version of this story before, with machine tools rather than machine text. The 'German Lesson' refers to East Germany’s rapid convergence toward West German productivity after 1990, which was driven less by single cheques than by infrastructure and institutional complements deployed with ruthless selectivity. Property rights and apprenticeship standards were cloned quickly; capital and managerial talent flowed to plants with demonstrable adaptive capacity; and clusters such as “Silicon Saxony” compounded gains through dense supplier networks and university linkages. Contemporary analyses emphasize that the state prioritized firms likely to translate investment into efficiency and then used cluster policy to lock in spillovers—culminating in today’s semiconductor and battery corridors. That design principle matters now: treating generative AI as infrastructure means funding the rails (secure retrieval layers, curated corpora, connectivity), not just licenses, and selecting initial use-cases where the institution has absorptive capacity. Germany’s recent AI policy reviews reach a parallel conclusion: complementary investments and governance—not gadgets—determine whether adoption stalls or scales.

Implications for Schools, Universities, and Ministries

For educators, the near-term task is capability equalization. Establish a baseline AI fluency (prompt hygiene, retrieval grounding, source citation, and red-teaming for bias) and shift assessment toward reasoning under uncertainty, critique, and oral defense. For administrators, the imperative is task redesign. Map routine versus non-routine activities across teams, then channel first-drafting, summarization, and synthesis toward those who realize the largest quality boost with assistance, reserving scarce expert hours for review and decision. Build workflows that assume AI is fallible: require claim-evidence tables, citations to retrievable sources, and cross-checks by peers. Ministries can underwrite the standard rails (procurement frameworks, privacy-preserving data environments, and secure retrieval layers) so institutions can plug models into vetted knowledge rather than the open web. Where budgets are tight, prefer shared services over fragmented licenses; a national retrieval gateway serving curricular and research content will do more for equity than hundreds of small deployments. International guidance now offers practical guardrails for doing precisely this.
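One way to make the "assume AI is fallible" workflow concrete is a claim-evidence table that blocks sign-off until every claim has a retrievable source and a peer cross-check. The sketch below is a minimal illustration, not a prescribed format: the `Claim` fields, the `unresolved` helper, and the sample document identifiers are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Claim:
    """One row of a hypothetical claim-evidence table."""
    text: str
    sources: list[str] = field(default_factory=list)  # IDs of retrievable documents
    peer_checked: bool = False                         # cross-checked by a colleague


def unresolved(claims: list[Claim]) -> list[str]:
    """Return the text of claims lacking a source or a peer cross-check."""
    return [c.text for c in claims if not c.sources or not c.peer_checked]


# Illustrative rows; the claims and document IDs are made up.
table = [
    Claim("Enrollment rose in the last cycle", ["doc-001"], peer_checked=True),
    Claim("Dropout rates fell in rural schools", [], peer_checked=True),
    Claim("Teacher vacancies doubled", ["doc-017"], peer_checked=False),
]

blocked = unresolved(table)
print("Ready for sign-off" if not blocked else f"Needs work: {blocked}")
```

A draft would pass review only when `unresolved` returns an empty list, which keeps the human check tied to specific claims rather than to the document as a whole.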

Answering the Obvious Objections: Accuracy, Dependence, Equity

Skeptics are right to worry about reliability. Large models still hallucinate, and ungrounded legal and technical answers can be wrong with unwelcome confidence. Peer-reviewed evaluations in law show high error rates without retrieval; industry and editorial analyses confirm that hallucinations remain a live risk even as model families improve. The remedy is neither abstinence nor blind faith, but layered safeguards: retrieval-augmented generation to anchor claims in sources, structured prompts with explicit reasoning checks, and mandatory human review for high-stakes outputs. A second concern is skill atrophy. The antidote is curricular: alternate “AI-off” and “AI-on” assignments, require distinctive oral defenses, and make attribution and version control part of grading. The third concern is equity. Adoption has stagnated in some sectors even as frontier institutions accelerate; early workplace studies show gains skewing toward newcomers when tools are deployed well. Public financing of shared retrieval gateways, open reference corpora, and universal training is the most cost-effective way to ensure benefits accrue across schools and regions, not just to those already ahead.

A Playbook for Leaders: Treat AI as Shared, Measured Infrastructure

The quickest path to durable gains is to treat AI like a network: shared, reliable, and metered. First, universal access with differentiated support—everyone gets the tool; newcomers get scaffolds and exemplars; experts get workflow automations and retrieval hooks. Second, data-guided allocation—after one quarter, measure quality and cycle time by task type with and without assistance, then reassign work so people spend more time where their AI-augmented comparative advantage is largest. The analogy is workforce planning, not software rollout. Third, milestone-tied funding—release budgets for licenses and compute only when units hit agreed accuracy (via blind raters), cycle time, and satisfaction targets. A lightweight “model-of-record” policy reduces operational risk: name the supported models and retrieval sources for each workflow, with sunset dates and replacement criteria. None of this is hypothetical; European employers report growing investment intent alongside governance and enablement as the binding constraints, and euro-area policymakers are already tracking adoption and employment effects.
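The data-guided allocation step can be illustrated with a small assignment exercise. The sketch below assumes a team has already measured AI-assisted output scores per person and task type; the names and numbers are hypothetical, and the brute-force search over permutations is a simplification that only suits small teams (a larger unit would use a proper assignment solver).

```python
from itertools import permutations

# Hypothetical AI-assisted output scores by person and task type,
# e.g. blind-rated quality per hour after one quarter of measurement.
scores = {
    "novice_a": {"drafting": 8.0, "synthesis": 6.0, "review": 3.0},
    "novice_b": {"drafting": 7.0, "synthesis": 7.5, "review": 4.0},
    "expert_c": {"drafting": 8.5, "synthesis": 8.0, "review": 9.0},
}


def best_assignment(scores: dict[str, dict[str, float]]) -> tuple[dict[str, str], float]:
    """Try every one-task-per-person assignment and keep the highest total score."""
    people = list(scores)
    tasks = list(next(iter(scores.values())))
    if len(tasks) < len(people):
        raise ValueError("need at least as many task types as people")
    best_plan, best_total = {}, float("-inf")
    for perm in permutations(tasks, len(people)):
        total = sum(scores[person][task] for person, task in zip(people, perm))
        if total > best_total:
            best_plan, best_total = dict(zip(people, perm)), total
    return best_plan, best_total


plan, total = best_assignment(scores)
print(plan, total)
```

In this made-up data the expert scores highest on every task, yet the best plan still places the expert on review and the novices on drafting and synthesis. That is the comparative-advantage logic of the playbook: assign by where each person's assisted output adds most to the total, not by who is best in absolute terms.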

Build the Ramp, Raise the Roof

We opened with a simple but radical finding: give people a competent assistant and they not only work faster and better, they also reshuffle into roles where their strengths matter more—unlocking measurable gains without new spending. That is the opportunity education should seize. Treat generative AI as shared infrastructure, fix reliability with retrieval and review, credential the skills that make assistance safe and valuable, and reorganize work so the right people do the right tasks at the right time. If we build the ramp—standard rails, shared corpora, universal training—we invite in candidates who would once have been dismissed. If we raise the roof—role design, incentives, and safeguards—we let experts reach farther. The payoff is not an abstract promise but a concrete productivity uplift already visible in controlled settings. The task now is execution. Fund the rails, credential the skills, measure the gains, and reassign work to where human judgment plus machine assistance is most potent.

The original article was authored by Aleš Maršál and Patryk Perkowski. The English version of the article, titled "Task-based returns to generative AI: Evidence from a central bank," was published by CEPR on VoxEU.

References

Brynjolfsson, E., Li, D., & Raymond, L. (2024). Generative AI at Work. Quarterly Journal of Economics, 140(2), 889–932.

Dell’Acqua, F., McFowland III, E., Mollick, E., Lifshitz-Assaf, H., Kellogg, K., Rajendran, S., Krayer, L., Candelon, F., & Lakhani, K. (2023). Navigating the Jagged Technological Frontier: Field Experimental Evidence of the Effects of AI on Knowledge Worker Productivity and Quality. HBS Working Paper 24-013.

European Central Bank (ECB). (2025, March 21). AI adoption and employment prospects. ECB Blog.

Gkagkosi, N. (2025, June 6/July 1 update). Productivity Is the Real Border: Re-Reading East Germany’s Convergence Miracle for a Post-Pandemic Europe. The Economy (economy.ac).

Hennicke, M., Lubczyk, M., & Mergele, L. (2025, June 5). Industrial policy lessons from East Germany’s privatisation. VoxEU/CEPR.

IAB-Forum (Institute for Employment Research). (2025, May). Artificial intelligence in the workplace: insights into the transformation of customer services.

KPMG Germany. (2025, April). Study: Generative AI in the German economy in 2025.

Maršál, A., & Perkowski, P. (2025, July 31). Task-based returns to generative AI: Evidence from a central bank. VoxEU/CEPR.

Noy, S., & Zhang, W. (2023). Experimental evidence on the productivity effects of generative AI (ChatGPT). Science, 381(6654), 187–192.

OECD. (2024, June). OECD Artificial Intelligence Review of Germany. Paris: OECD Publishing.

UNESCO. (2023; last updated 2025). Guidance for generative AI in education and research. Paris: UNESCO.

ZEW Mannheim. (2024, September). AI Adoption Stagnates in German Companies (press release).

Zhang, W., & Noy, S. (2023). Experimental evidence on the Productivity Effects of Generative AI (Working Paper version). MIT Economics.

Stanford Digital Humanities & Law (DHO). (2025). Hallucination-Free? Assessing the Reliability of Leading AI Legal Research Tools. Working paper.

Financial Times. (2025, July). The ‘hallucinations’ that haunt AI: why chatbots struggle to tell the truth.
